🫴🦋 Is this an emoji?

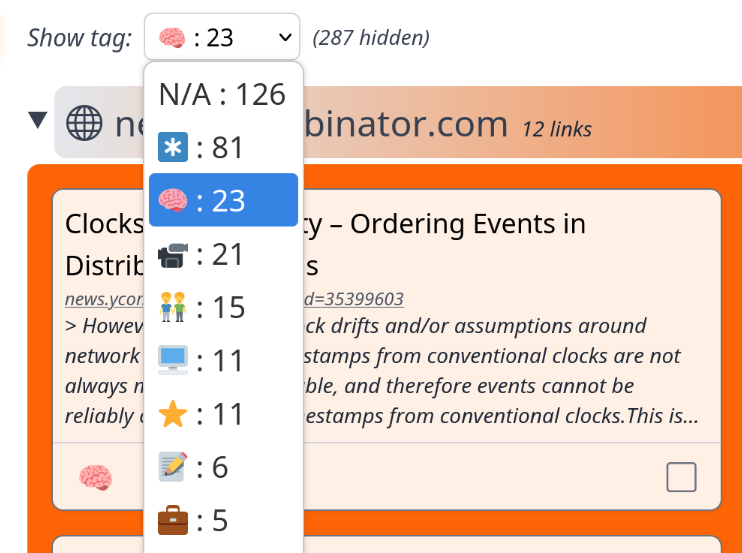

ReadStuffLater uses emojis to tag content †. It's simple, it's fun, and it affords basic content organization without encouraging users to spiral into reinvent-Dewey-Decimal territory.

There's just one problem: data validation. When the client tells my server to tag a record, how can the server confirm the tag is actually an emoji? I mean, I shouldn't accept and store just anything in that field, right?

This is a much gnarlier problem than it has any right to be. If you want the TL;DR, see what I did and what I wish I'd done, and an improved regex solution!

Failed idea #1: Use Regex character classes

My first thought was to google around for this, and everyone recommends regex! Everyone! Well that seemed easy.

There is a fairly recent extension to regex, Unicode property escapes (in JavaScript since ES2018), that lets you specifically ask, "is this an emoji?"

Except it's wrong. And also not available everywhere.

    const regex = /^\p{Emoji}$/gu;

    console.log("🙂".match(regex));  // > Array ["🙂"]
    console.log("*️⃣".match(regex)); // > null (asterisk + VS16 + keycap)
    console.log("👨🏾".match(regex)); // > null (man + skin tone modifier)

I mean, it produces kinda-okay results if you ask "does this string contain any number of emojis". But it fails hard when you ask "Is this string made of exactly one emoji, and nothing else?".
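Even the "contains an emoji" reading has a wrinkle worth checking: the Unicode Emoji property also covers the digits, "#", and "*", because they are the base characters of keycap emoji. A minimal sketch (looksLikeEmoji is a made-up helper name, not something from a library):

```javascript
// Naive check: does the string contain any code point with the Emoji property?
// `looksLikeEmoji` is a hypothetical helper for illustration only.
const looksLikeEmoji = (s) => /\p{Emoji}/u.test(s);

console.log(looksLikeEmoji("\u{1F642}")); // true: slightly smiling face
console.log(looksLikeEmoji("7"));         // true: digits are keycap bases
console.log(looksLikeEmoji("#"));         // true: so is the number sign
console.log(looksLikeEmoji("hello"));     // false
```

So a phone number would pass this check, which is probably not what anyone means by "is this an emoji?".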

Also, it seems Postgres regex doesn't support these special character classes, so validation would be strictly at the application layer.

EDIT: Someone showed how to patch some of the holes in this approach and make it work. Check it out below!

Why does the regex give the wrong answer?

I'm glad you asked! It turns out there isn't really such a thing as "an emoji". You have code points, and code point modifiers, and code point combinations.

A great primer on this is Bear Plus Snowflake Equals Polar Bear.

Here's the dealio: Let's say we want to display the emoji for a brown man, "👨🏾". There isn't a single code point for that. Instead we use "👨" immediately followed by a skin tone modifier, "🏾".

Other emoji are glued together with ZWJ, the "zero-width joiner". It's a Unicode code point that gets used in I guess the Indian Devanagari writing system? But it's also a fundamental building block for emojis.

Its job is "when a mommy code point loves a daddy code point very much, they come together and make a whole new glyph".

Basically any emoji that includes at least 1 person who isn't a boring yellow person doing nothing is several code points stapled together, with skin tone modifiers, ZWJ, or both. Some other things work this way too.

Some examples include: 👨‍👩‍👦 (man + woman + boy), 👩‍✈️ (woman + airplane), and ❤️‍🔥 (heart + fire).

(And flags are multiple code points that aren't connected by ZWJ! ††)

(If your computer doesn't have current or exhaustive emoji fonts (thanks, Linux!), you might see what's supposed to be a single glyph instead displayed as several emojis side by side, like how my computer shows "Women With Bunny Ears Partying, Type-1-2" as " 👯 🏻 ♀️".)

So our regex can't just check if a string is an emoji: many things we want to identify are several emojis stapled together.
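To see the stapling for yourself, you can list the code points inside a string. This is a small sketch; codePoints is a hypothetical helper name, and the test strings are written as escapes so the invisible code points survive copy/paste:

```javascript
// List the code points in a string as "U+XXXX" labels.
// `codePoints` is a hypothetical helper name for this sketch.
const codePoints = (s) =>
  Array.from(s, (ch) =>
    "U+" + ch.codePointAt(0).toString(16).toUpperCase().padStart(4, "0")
  );

// Man + medium-dark skin tone modifier (no joiner involved).
console.log(codePoints("\u{1F468}\u{1F3FE}"));
// [ 'U+1F468', 'U+1F3FE' ]

// Woman + ZWJ + airplane + VS16 (woman pilot).
console.log(codePoints("\u{1F469}\u200D\u2708\uFE0F"));
// [ 'U+1F469', 'U+200D', 'U+2708', 'U+FE0F' ]

// Two regional indicators, T and W (a flag, no ZWJ).
console.log(codePoints("\u{1F1F9}\u{1F1FC}"));
// [ 'U+1F1F9', 'U+1F1FC' ]
```

Each of those strings is meant to display as one glyph, yet none of them is a single code point, which is why an anchored single-character match rejects them.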

(The way you want to think about your goal here is "graphemes" and "glyphs", not "characters".)

Fortunately, when I experimented, it looked like you have to join characters in a specific order, so when you add both skin tone and hair color ("👱🏿‍♂️") you can count on it happening in exactly one canonical code point sequence. Otherwise, we'd have to dive into Unicode normalization (a good topic to understand anyway!).

Edit: Someone showed me how to make this work. Check it out below!

Failed idea #2: Use Regex character ranges

Alright, so we can't just use the special regex "match me some emoji" feature. What about a regex full of Unicode character ranges? StackOverflow sure loves those!

Well, they're all either too broad or too narrow.

You get stuff like "just capture anything that's a 'Unicode other symbol'" (/\p{So}+/gu). This fails for the same reasons as approach #1, and also for the bonus reason that this character class includes symbols that aren't emojis ('❤').
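A quick sketch of both failure modes, using an anchored version of that pattern (the test strings are escapes so the exact code points are unambiguous):

```javascript
// Anchored "other symbol" check, as a stand-in for the StackOverflow pattern.
const otherSymbolsOnly = /^\p{So}+$/u;

// False positive: the degree sign is an "other symbol" but not an emoji.
console.log(otherSymbolsOnly.test("\u00B0"));              // true

// A lone emoji code point does match.
console.log(otherSymbolsOnly.test("\u{1F642}"));           // true

// False negative: the skin tone modifier is category Sk, not So.
console.log(otherSymbolsOnly.test("\u{1F468}\u{1F3FE}"));  // false
```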

Ah, but some other StackOverflow answer says to just use a regex for Unicode code points! That also fails the same way as approach #1, plus, nobody includes exhaustive code point ranges in their SO answers.

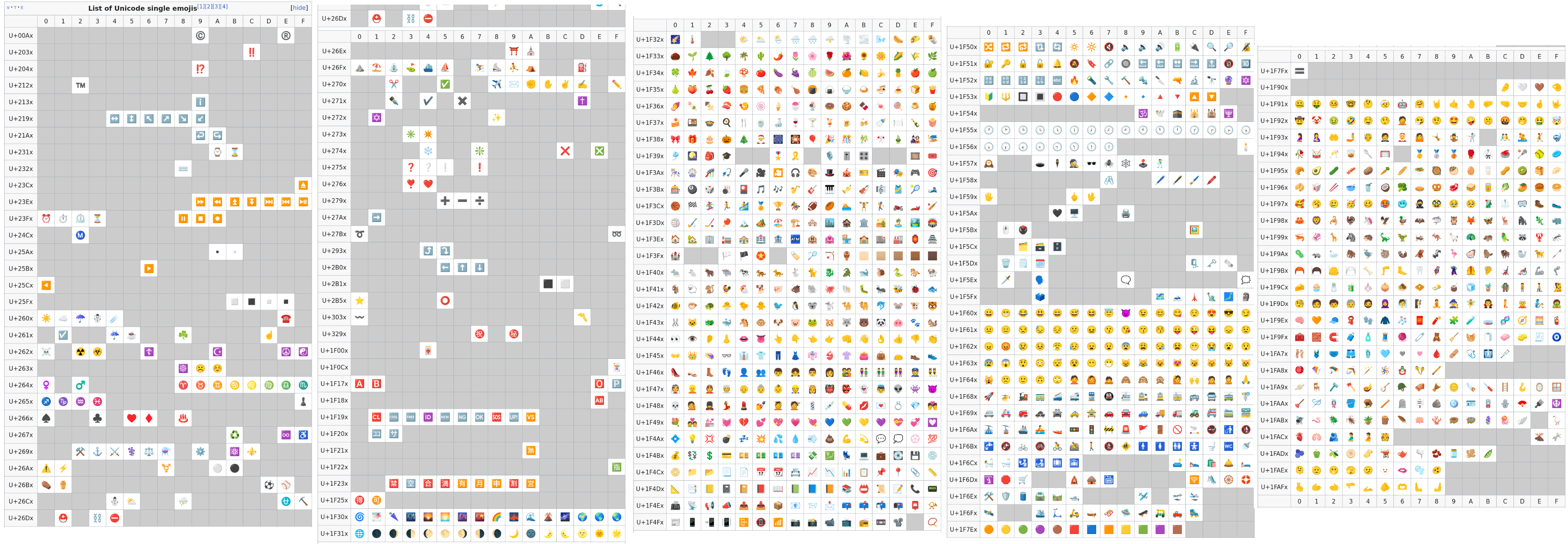

Here's a partial list of valid emoji:

Two things to note:

- There are quite a few code point ranges that include emoji! Not just the handful that all the StackOverflow answers include. If you want zero false positives, you need (eyeballing it) a hundred code point ranges.

- See all those grey empty spaces? Those are non-emoji characters that sit in those same code point ranges. You probably don't want to accept "ª", "«", etc. as emoji.

So you're either including a bajillion micro-ranges, or a handful of very wide ranges that will give false positives, or you're rejecting valid emoji.

And once you pick some ranges, I have no idea whether they'll include the new emoji the standard adds each year.

So validating code point ranges is a terrible approach. It's just plain wrong: emojis aren't individual code points in the first place, and you'll get a huge number of false positives, false negatives, or both.

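Oh, don't forget that JavaScript strings are UTF-16, while most other things you'll touch (files, the network, usually your database) use UTF-8. The code points themselves are the same everywhere, but without the u flag a JavaScript regex works on UTF-16 code units, so anything above U+FFFF has to be written as a surrogate pair. Hardcoded ranges copied from another language's answer may not work as-is.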
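Here's a small sketch of what that looks like for one emoji. The range used is just the "Emoticons" block, picked for illustration:

```javascript
const smile = "\u{1F642}"; // SLIGHTLY SMILING FACE

console.log(smile.length);                       // 2 (UTF-16 code units)
console.log(Array.from(smile).length);           // 1 (code point)
console.log(smile.codePointAt(0).toString(16));  // "1f642"
console.log(smile.charCodeAt(0).toString(16));   // "d83d" (high surrogate)
console.log(smile.charCodeAt(1).toString(16));   // "de42" (low surrogate)

// With the u flag, ranges are written in code points.
const withFlag = /^[\u{1F600}-\u{1F64F}]$/u;
// Without it, the same range has to be spelled as surrogate pairs.
const withoutFlag = /^\uD83D[\uDE00-\uDE4F]$/;

console.log(withFlag.test(smile), withoutFlag.test(smile)); // true true
```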

Failed idea #3: just stuff all possible emojis into a regex

Alright, so what if I just get a list of EVERY POSSIBLE EMOJI, and build a regex out of them like /🙂|😢|😆/. It's exhaustive, it's accurate, and it'll match individual, whole glyphs.

Except... *️⃣ broke my regex, because it's not its own symbol: it's a regular asterisk followed by other stuff: "* + VS16 + COMBINING ENCLOSING KEYCAP".

VS16 is the Unicode code point that says "Hey, this character can either look like text or like an emoji, please show it as emoji".

Regex wasn't happy about that - all it saw was a random asterisk in my pattern and it threw a fit.

I mean, even the markdown engine for this blog post mistook that as "please make the rest of my post italic" until I put the emoji into a code block.
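For what it's worth, the asterisk problem goes away if every alternative is escaped before it lands in the pattern. A minimal sketch, assuming you already have the full emoji list as an array of strings (escapeRegExp and buildEmojiRegex are hypothetical helper names, and the three-item list is a stand-in):

```javascript
// Escape regex metacharacters so a literal "*" or "#" can't break the pattern.
const escapeRegExp = (s) => s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");

// Build one anchored alternation from a list of emoji strings.
const buildEmojiRegex = (emojis) => {
  const alternatives = emojis.map(escapeRegExp);
  return new RegExp(`^(?:${alternatives.join("|")})$`, "u");
};

// A tiny stand-in list; a real one would hold every emoji you accept.
const emojiRegex = buildEmojiRegex([
  "\u{1F642}",          // slightly smiling face
  "*\uFE0F\u20E3",      // keycap asterisk
  "\u{1F468}\u{1F3FE}", // man, medium-dark skin tone
]);

console.log(emojiRegex.test("*\uFE0F\u20E3")); // true
console.log(emojiRegex.test("*"));             // false
```

That fixes the syntax error, though a regex with thousands of alternatives is still just an allowlist wearing a costume.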

But maybe I was on the right track trying to exhaustively match all emoji?

What worked for me

What I finally came up with was exhaustively validating emoji shortcodes instead of emojis themselves. Shortcodes are those things you type into GitHub or Slack to summon the emoji popup - e.g., ":winking_face:".

The great part about shortcodes is they're strictly simple characters. Off the cuff, I think it's all a-z and _. Unsure about numbers.

That makes them super convenient to store or pattern match on. Not so convenient for other reasons (see the next section).

So when a user picks out an emoji, I find its shortcode and store that in the database. When I display an emoji, I convert the other way.

To build my allowlist, I found an npm package that holds the same data as the emoji picker I'm using. I wrote a script to extract all the shortcodes, generate all the appropriate variants, and turn that into a SQL list of values I could copy/paste.

I stuffed that into a database table and foreign key'd my records to it. (I previously used a CHECK constraint using IN, but that made schemas very noisy.)

I wrote the script's output to disk and checked it into source control. Now every time I build the app I generate the data again and compare it against that checked-in oracle, so if the package's list of valid emoji gets updated, I'll get a build failure until I update my allowlist.
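The comparison step can be sketched roughly like this. It is not the actual build script: the function names are hypothetical, and it assumes both lists have already been loaded as arrays of strings.

```javascript
// Compare a freshly generated list against the checked-in one.
// Both arguments are plain arrays of strings; the names are hypothetical.
const diffAllowlist = (generated, checkedIn) => {
  const fresh = new Set(generated);
  const known = new Set(checkedIn);
  return {
    added: generated.filter((code) => !known.has(code)),
    removed: checkedIn.filter((code) => !fresh.has(code)),
  };
};

// Throw (and so fail the build) when the two lists disagree.
const assertAllowlistCurrent = (generated, checkedIn) => {
  const { added, removed } = diffAllowlist(generated, checkedIn);
  if (added.length > 0 || removed.length > 0) {
    throw new Error(
      `Emoji allowlist is stale. Added: ${added.join(", ")}. Removed: ${removed.join(", ")}.`
    );
  }
};
```

Reporting what was added and removed, instead of only "the files differ", makes the failure quicker to act on.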

Problem: solved ✅

What I wish I'd done instead

I should have done basically the same thing, except with the actual emoji. I used shortcodes because I got caught up in path dependence with the regex stuff. But if I'm already using a data structure of discrete strings, why not just use the emoji themselves?

There's a modest advantage in network / storage efficiency (why store lot bytes when few bytes do trick?), but the real advantage would be simplicity.

In the emoji dataset I have, an emoji like ":people_holding_hands:" 🧑‍🤝‍🧑 doesn't have different shortcodes for skin tone or hair color. It's just ":people_holding_hands:". Checking Emojipedia, I get the uncertain impression that shortcodes might not be standardized, and I see some tools have different shortcodes for skin tones, while others don't.

I had to make up my own encoding for that, including noticing the emoji might have zero skin tones (yellow figures), or multiple skin tones (two figures of different races).

I also have to do a lookup every time I display an emoji. In an ideal world, I'd lazy-load the emoji picker JS so it only downloads when the user actually wants to select an emoji.

But because I have to convert shortcodes to emoji, I have to load the picker database on any page where I want to display an emoji, so I can figure out what glyph matches a stored value like people_holding_hands:3:5.
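To give a feel for the extra work a homemade encoding brings, here is a rough sketch of parsing a value shaped like that one. The format details are guesses: it assumes a shortcode made of a-z, digits, and underscores, followed by zero or more numeric skin tone fields, and parseTag is a hypothetical name.

```javascript
// Assumed format: shortcode, then zero or more ":<number>" skin tone fields.
// The character set and the meaning of the numbers are guesses for illustration.
const TAG_PATTERN = /^([a-z0-9_]+)((?::\d+)*)$/;

const parseTag = (tag) => {
  const match = TAG_PATTERN.exec(tag);
  if (match === null) return null; // not a well-formed tag
  const [, shortcode, toneFields] = match;
  const tones =
    toneFields === "" ? [] : toneFields.slice(1).split(":").map(Number);
  return { shortcode, tones };
};

console.log(parseTag("people_holding_hands:3:5"));
// { shortcode: 'people_holding_hands', tones: [ 3, 5 ] }
console.log(parseTag("winking_face"));
// { shortcode: 'winking_face', tones: [] }
```

And that is only the parsing half; turning the result back into a glyph still needs the picker's data.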

If I were to revisit my implementation, I'd just store and validate straight-up emoji.
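At the application layer that could be as small as a set lookup. A minimal sketch, with a three-item stand-in for the real list (ALLOWED_EMOJI and isAllowedEmoji are hypothetical names):

```javascript
// Stand-in allowlist; a real one would be generated from your emoji dataset.
const ALLOWED_EMOJI = new Set([
  "\u{1F642}",                   // slightly smiling face
  "\u{1F468}\u{1F3FE}",          // man, medium-dark skin tone
  "\u2764\uFE0F\u200D\u{1F525}", // heart on fire
]);

// Exact string match: the tag must be one whole allowed emoji, nothing more.
const isAllowedEmoji = (tag) =>
  typeof tag === "string" && ALLOWED_EMOJI.has(tag);

console.log(isAllowedEmoji("\u{1F468}\u{1F3FE}")); // true
console.log(isAllowedEmoji("\u{1F468}"));          // false: not in this list
console.log(isAllowedEmoji("hello"));              // false
```

No conversion step, no custom encoding, and the stored value is the thing you display.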

A more technical solution

Over on Lobsters, user singpolyma pointed out how to test a string without needing an oracle.

You use your language's tools to detect if the string is a single grapheme, and then you check if it either passes the Emoji_Presentation regex character class, or contains the emoji variation selector code point (VS16, U+FE0F).

Here's what you do:

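    const isEmoji = (e: string) => {
      // Splits a string into user-perceived characters (graphemes).
      const segmenter = new Intl.Segmenter();
      // Matches code points that render as emoji by default.
      const regex = /\p{Emoji_Presentation}/u;
      // VS16 (U+FE0F) asks for emoji presentation of the preceding character.
      const variantSelector = String.fromCodePoint(0xfe0f);

      return Boolean(
        Array.from(segmenter.segment(e)).length === 1 &&
          (e.match(regex) || e.includes(variantSelector))
      );
    };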

On my test data set, 229 out of 3,664 emoji fail the regex test by itself, such as ☺️, ☹️, ☠️, 👁️‍🗨️. But all of those contain the VS16 emoji variation selector code point!

This means you use the grapheme count to tell "does this look like one glyph to the user?", then follow up with "does this either show as an emoji by default, or get converted into one?". All the safety, no oracles!

Well... mostly. It does mean any single grapheme containing VS16 will be accepted (a plain letter followed by VS16, say), which isn't the same thing as a valid emoji...
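One way to narrow that hole, offered as an untested-in-production sketch and not as part of singpolyma's suggestion: only trust VS16 when the grapheme also starts with a code point that has the Emoji property. The name isProbablyEmoji is made up.

```javascript
const segmenter = new Intl.Segmenter(); // default granularity is "grapheme"
const VS16 = "\uFE0F";

const isSingleGrapheme = (s) =>
  Array.from(segmenter.segment(s)).length === 1;

// Accept default-emoji graphemes, or VS16 sequences whose base character
// has the Emoji property. `isProbablyEmoji` is a hypothetical name.
const isProbablyEmoji = (s) =>
  isSingleGrapheme(s) &&
  (/\p{Emoji_Presentation}/u.test(s) ||
    (s.includes(VS16) && /^\p{Emoji}/u.test(s)));

console.log(isProbablyEmoji("\u263A\uFE0F")); // true: smiling face + VS16
console.log(isProbablyEmoji("a\uFE0F"));      // false: "a" is not an emoji base
console.log(isProbablyEmoji("7\uFE0F"));      // true: digits are keycap bases
```

As the last line shows, it is still not airtight: a digit followed by VS16 gets through, so an allowlist remains the only way to be exact.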

Intl.Segmenter was available everywhere except Firefox when I wrote this (Firefox has since shipped it). And Postgres cannot count graphemes or use the Emoji regex character class, so you can only do application-level data validation. But you're free from managing an allowlist, so there's that.

Footnotes:

†† Your emoji picker includes country flags, but the Unicode Consortium doesn't want to take sides on whether Taiwan is a real country.

So they dodged the issue: if your text includes a 2-letter country abbreviation encoded as emoji letters, it might or might not display as a flag, depending on how your device feels.

So you're free to include "🇹 🇼" in your text, and if you just so happen to be in a country that doesn't find Taiwanese sovereignty objectionable, you'll see it displayed as 🇹🇼. Otherwise you'll just see 🇹 🇼.

EDIT: New info from Lobsters: it looks like my information is outdated! Or maybe wrong! I mean the part about how flags are rendered is correct, but the "they don't say which flags are valid" part might not be.

At some point the list of acceptable country flags got enumerated. That file dates back to 2015, and references a Consortium task seeking to clarify what "subtags" are valid. That Atlassian task is newer than the GitHub commits, so I guess its timestamp is a lie, leaving me unable to tell how early the enumeration took place.

However, "depictions of images for flags may be subject to constraints by the administration of that region."

I would have learned this factoid sometime around the Unicode 6.0 release in 2010, so maybe they started enumerating country codes later, or maybe I just learned wrong in the first place.

† Why emoji and not a normal tagging system with arbitrary text?

I want ReadStuffLater to be a very low-friction, low-cognitive-overhead experience. It's not a place to organize your second brain; it's just read-and-delete.

Simply making rich organization available can make people feel like they're supposed to use it. And once people think that's the kind of app this is, they'll start expecting features that are expressly out of scope.

Yet once a user saves hundreds of links, they need something besides one giant list. This is my attempt to split the difference. And for product positioning purposes, I want to signal "do not expect this to be the same as Instapaper".

If a more second-brain-flavored reading list is what you need, I recommend Instapaper, Pocket, or Wallabag. They're a take on this problem with a stronger focus on long-term knowledge retention.